How Social Media Detects Mental Health Crises

Your phone knows you’re struggling before you do. It’s a reality that feels like science fiction, but it’s happening right now across social media platforms worldwide.

Every day, algorithms analyze billions of posts, comments, and interactions to identify users who may be in a mental health crisis.

Computer scientists are now using quantitative techniques to predict the presence of specific mental disorders and symptoms, such as depression, suicidal thoughts, and anxiety. The technology has become remarkably sophisticated, tracking everything from the language you use to the time you spend scrolling.
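Before any model can learn from "the language you use" and "the time you spend scrolling," each post has to become numbers. The sketch below shows one minimal way to do that. The feature names, the small first-person word list, and the `minutes_scrolling` field are all assumptions for illustration. Platforms do not publish their real feature sets.

```python
from dataclasses import dataclass

# Hypothetical word list for illustration; not a validated clinical lexicon.
FIRST_PERSON = {"i", "me", "my", "mine", "myself"}


@dataclass
class Post:
    text: str
    minutes_scrolling: float  # assumed: time spent in the app around this post


def extract_features(post: Post) -> dict:
    """Turn one post into a few numeric signals a model could learn from."""
    # Lowercase each word and trim surrounding punctuation.
    words = [w.strip(".,!?;:\"'").lower() for w in post.text.split()]
    words = [w for w in words if w]
    n = len(words) or 1  # avoid dividing by zero on an empty post
    return {
        "word_count": float(len(words)),
        "first_person_rate": sum(w in FIRST_PERSON for w in words) / n,
        "minutes_scrolling": post.minutes_scrolling,
    }


features = extract_features(Post("I can't sleep and my head hurts", 95.0))
print(features)
```

A real system would use far richer signals. The point is only that both wording and usage time end up as plain numbers a model can weigh.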

The Science Behind the Surveillance

In one study published in Scientific Reports, researchers built artificial neural network (ANN) models to predict suicide risk from the everyday language of social media users. ANNs are computer systems loosely inspired by how the human brain works, designed to recognize patterns and make predictions from data. The dataset included 83,292 posts written by 1,002 authenticated Facebook users, along with valid psychosocial information about those users. That study worked from the text of posts. Detection systems in general, however, need not stop at what you write. In principle, they can also draw on behavioural signals such as how long you pause before typing, how often you delete and retype messages, and subtle shifts in your language over time.
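The study's models were far more elaborate than anything that fits here. As a deliberately tiny stand-in, the sketch below trains a single sigmoid neuron to score short posts. A single sigmoid neuron is mathematically the same as logistic regression and is the simplest building block of an ANN. The four posts and their labels are invented toy data, not taken from the study. Nothing here reflects how any platform's production model works.

```python
import math

# Hypothetical toy data: 1 = post labelled as showing distress, 0 = not.
POSTS = [
    "I feel hopeless and alone",
    "everything feels pointless lately",
    "great game with friends tonight",
    "loving this sunny weekend",
]
LABELS = [1, 1, 0, 0]


def tokenize(text):
    """Lowercase words with surrounding punctuation removed."""
    return [w.strip(".,!?").lower() for w in text.split() if w.strip(".,!?")]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(posts, labels, epochs=200, lr=0.5):
    """Train one sigmoid neuron on word counts with stochastic gradient descent."""
    weights = {w: 0.0 for p in posts for w in tokenize(p)}
    bias = 0.0
    for _ in range(epochs):
        for post, y in zip(posts, labels):
            words = tokenize(post)
            p = sigmoid(bias + sum(weights[w] for w in words))
            err = p - y  # gradient of the log-loss with respect to the neuron's input
            bias -= lr * err
            for w in words:  # a repeated word is updated once per occurrence (count feature)
                weights[w] -= lr * err
    return weights, bias


def score(weights, bias, text):
    """Estimated probability of the 'distress' label; unseen words are ignored."""
    return sigmoid(bias + sum(weights.get(w, 0.0) for w in tokenize(text)))


weights, bias = train_neuron(POSTS, LABELS)
print(round(score(weights, bias, "I feel alone"), 3))
print(round(score(weights, bias, "sunny weekend with friends"), 3))
```

Words that appear only in the distress-labelled posts end up with positive weights, and the other words end up with negative ones. This also shows the limitation discussed later in this article: the model knows nothing beyond the vocabulary and labels it was trained on.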

Facebook has started using artificial intelligence to help people who may be at risk of suicide. Its AI systems scan posts for warning signs that human moderators might miss. Researchers are also building similar models at a larger scale. In one study, a model was trained on a diverse dataset of 996,452 social media posts in four languages (English, Spanish, Mandarin, and Arabic), collected from Twitter, Reddit, and Facebook over 12 months.

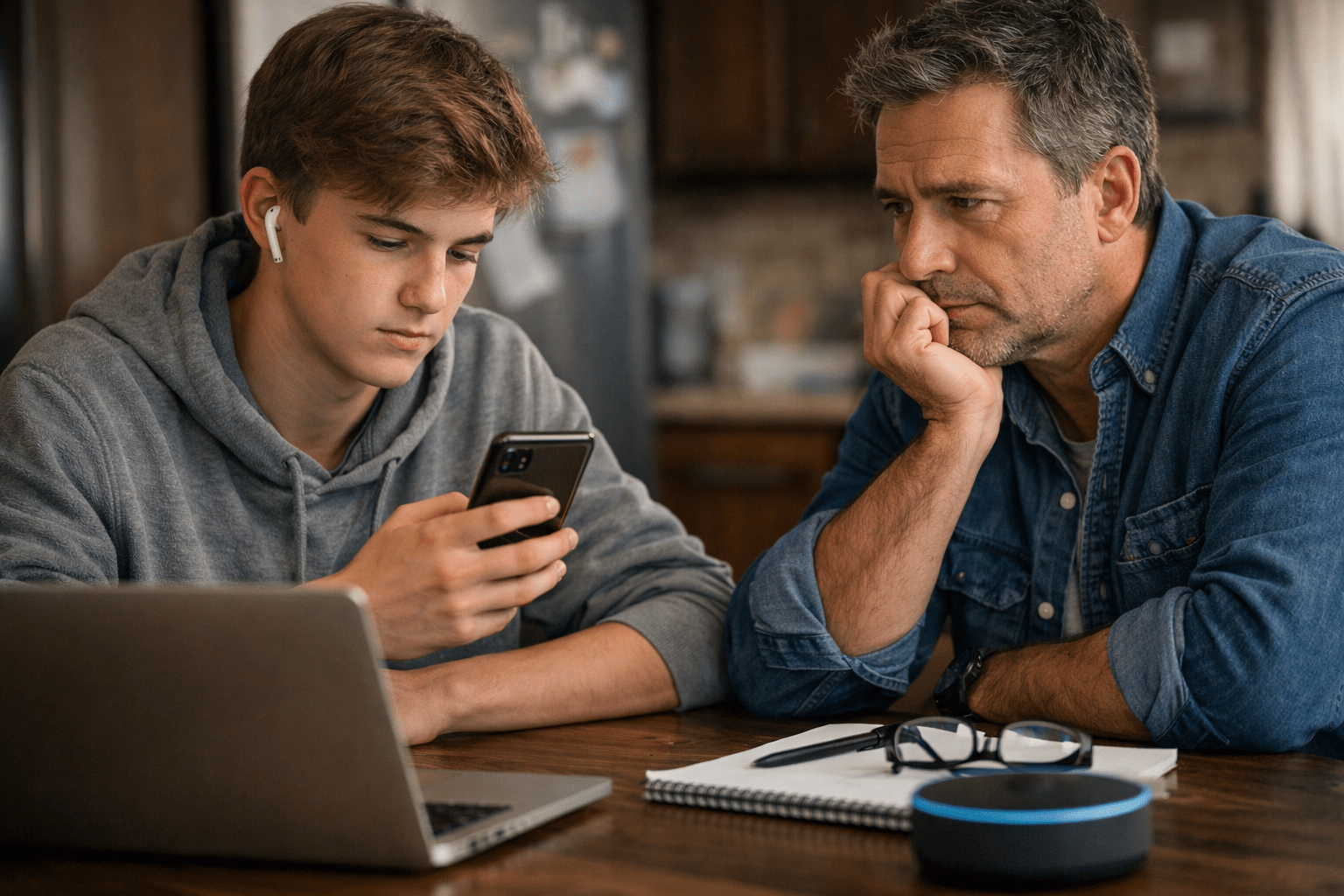

The Algorithmic Impact on Youth

The implications are particularly significant for young people. According to a study of American teens aged 12 to 15, those who used social media for more than three hours a day faced twice the risk of negative mental health outcomes, including symptoms of depression and anxiety.
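"Twice the risk" is a relative comparison. The sketch below shows the basic arithmetic using invented counts, not the study's data. Published studies often report ratios adjusted for other factors, and this simple version ignores that.

```python
def relative_risk(cases_exposed, n_exposed, cases_unexposed, n_unexposed):
    """Risk of an outcome in the exposed group divided by risk in the unexposed group."""
    risk_exposed = cases_exposed / n_exposed
    risk_unexposed = cases_unexposed / n_unexposed
    return risk_exposed / risk_unexposed


# Hypothetical counts, NOT from the study:
# 20 of 100 heavy users (3+ hours/day) vs 10 of 100 lighter users report symptoms.
rr = relative_risk(20, 100, 10, 100)
print(rr)
```

A ratio of 2 means the outcome was twice as common among heavy users. On its own, it says nothing about how common the outcome was overall: 20% versus 10% and 2% versus 1% both give the same ratio.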

When researchers created automated accounts registered as 13-year-olds, TikTok's algorithm served those accounts tens of thousands of weight-loss videos within just a few weeks of joining the platform.

Not Everyone Is Online

While algorithms can scan millions of posts for signs of distress, many people never share their mental health struggles online or do not use social media at all. Some individuals may:

- Prefer in-person conversations or private communications.

- Lack easy access to the internet or digital devices.

- Face language, cultural, or accessibility barriers that keep them offline.

- Experience stigma, privacy concerns, or fear of judgment that discourage posting about personal struggles.

This creates a gap: people struggling in silence, or those outside the digital world, may not benefit from the advances of AI-driven detection.

It’s important for mental health professionals and communities to remember that relying solely on social media signals can leave some individuals invisible to these technologies. In fact, studies point out that machine learning models built from social media posts risk missing those who do not or cannot participate in digital spaces.
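The gap compounds. Below is a back-of-the-envelope sketch with made-up proportions. It rests on the simplifying assumption that the three proportions are independent of one another. Even a fairly accurate model ends up reaching only a fraction of the people who need help.

```python
def share_reachable(share_online, share_who_post_about_it, recall):
    """Fraction of all at-risk people a post-based model could flag.

    A model that reads posts can only flag people who are online AND post about
    their struggles, and then only catches a `recall` fraction of them.
    Assumes the three proportions are independent of one another.
    """
    for p in (share_online, share_who_post_about_it, recall):
        if not 0.0 <= p <= 1.0:
            raise ValueError("each proportion must be between 0 and 1")
    return share_online * share_who_post_about_it * recall


# Hypothetical inputs for illustration only.
reachable = share_reachable(0.8, 0.3, 0.7)
print(f"{reachable:.1%} of at-risk people could be flagged")
```

With these invented numbers, improving the model's recall barely helps. Most people are missed because they never post about their struggles in the first place.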

What’s more, digital clues or “proxies” for mental health don’t always reflect what people are truly experiencing in real life or how they might be diagnosed in a clinical setting.

Offline outreach, community engagement, and traditional mental health services remain essential. Friends, families, educators, and health providers should continue to watch for signs of distress and offer support, especially to those who may not be digitally connected.

The Ethical Dilemma

While these systems may help save lives, they also bring up some serious concerns – especially around privacy, fairness, and accuracy.

One big issue is bias: when the data used to train an algorithm is limited or not diverse, the system might miss warning signs in some people or wrongly flag others. For example, a tool built mostly on posts from English-speaking users might not work as well for people who use other languages. This means some people could be unfairly overlooked, while others might be flagged unnecessarily.
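One common way to check for this kind of bias is to evaluate a model separately for each group rather than on the whole dataset at once. The sketch below assumes you already have labelled evaluation data; the records in it are invented. It reports both failure modes described above: at-risk people the model missed, and people it wrongly flagged.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """records: iterable of (group, truly_at_risk, flagged_by_model) tuples.

    Returns, per group, the share of at-risk people the model missed and the
    share of not-at-risk people it wrongly flagged (None if a group has no
    people in that category).
    """
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "fp": 0, "tn": 0})
    for group, at_risk, flagged in records:
        c = counts[group]
        if at_risk:
            c["tp" if flagged else "fn"] += 1
        else:
            c["fp" if flagged else "tn"] += 1
    rates = {}
    for group, c in counts.items():
        positives = c["tp"] + c["fn"]
        negatives = c["fp"] + c["tn"]
        rates[group] = {
            "missed": c["fn"] / positives if positives else None,
            "wrongly_flagged": c["fp"] / negatives if negatives else None,
        }
    return rates


# Hypothetical evaluation records, invented for illustration.
records = [
    ("English", True, True), ("English", True, True), ("English", True, False),
    ("English", False, False), ("English", False, False),
    ("Spanish", True, True), ("Spanish", True, False), ("Spanish", True, False),
    ("Spanish", False, True), ("Spanish", False, False),
]
for group, r in error_rates_by_group(records).items():
    print(group, r)
```

A single overall accuracy figure would blur these two groups together. Splitting the results by group is what makes the disparity visible.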

Because of this, companies like Meta (which owns Facebook and Instagram) clearly state that their algorithms are only meant to help spot posts that might signal someone is at risk. They are not designed to diagnose or treat mental health conditions – something that should always involve a real person and a full understanding of someone’s situation.
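In code terms, "spotting posts, not diagnosing" can mean that the model's output feeds a review queue rather than making a decision. Here is a minimal sketch of that idea. The 0.8 threshold, the `ReviewItem` structure, and the post IDs are hypothetical and do not describe Meta's actual system.

```python
from dataclasses import dataclass


@dataclass
class ReviewItem:
    post_id: str
    risk_score: float
    note: str = "Flagged for human review; not a diagnosis."


def triage(scores, threshold=0.8):
    """Queue posts scoring at or above `threshold` for a trained human reviewer.

    `scores` maps post IDs to model scores between 0 and 1. The model only
    decides which posts a person looks at first; it makes no clinical judgement.
    """
    queue = [ReviewItem(pid, s) for pid, s in scores.items() if s >= threshold]
    return sorted(queue, key=lambda item: item.risk_score, reverse=True)


for item in triage({"p1": 0.95, "p2": 0.40, "p3": 0.82}):
    print(item.post_id, item.risk_score, item.note)
```

Everything that happens after the queue, from reaching out to calling for help, stays with people.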

In short: while technology can help identify when someone needs support, it cannot—and should not—replace mental health professionals or proper care.

Privacy vs. Prevention

The tension between privacy and prevention continues to intensify. Suicide remains a major public health problem worldwide. With growing evidence linking social media use to suicide, platforms such as Facebook have developed automated algorithms to detect suicidal behaviour. However, this raises important questions about the cost to our digital privacy.

The scale of the issue is hard to ignore. For example, the 988 suicide prevention hotline in Canada receives approximately 1,000 calls and texts every day. This number represents only those who reach out for help; many more may be struggling quietly, without contacting anyone—either online or offline. It is a sobering reminder that digital tools and crisis hotlines are vital but cannot reach everyone who needs support.

Both technology-based interventions and broader, offline support systems are essential. No single approach can address every individual’s needs, and ongoing attention to privacy and human connection will always be an important part of mental health care.

Moving Forward

Algorithms can improve digital-media platforms in two ways: by using different optimization metrics to rank content, or by prompting interventions upon detecting problematic content. The challenge lies in balancing technological intervention with human agency and privacy rights.
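The two approaches can be sketched side by side. The engagement and well-being scores, the blending weight, and the `SUPPORT_PROMPT` placeholder are all hypothetical. Real platforms' ranking signals are not public.

```python
from dataclasses import dataclass


@dataclass
class Item:
    item_id: str
    predicted_engagement: float  # assumed model output, scaled 0-1
    predicted_wellbeing: float   # hypothetical second model's output, scaled 0-1
    flagged_harmful: bool = False


def rank(items, wellbeing_weight=0.5):
    """Approach 1: sort by a blend of engagement and well-being instead of engagement alone."""
    def blended(item):
        return ((1 - wellbeing_weight) * item.predicted_engagement
                + wellbeing_weight * item.predicted_wellbeing)
    return sorted(items, key=blended, reverse=True)


def build_feed(items, wellbeing_weight=0.5):
    """Approach 2: when a ranked item is flagged, show a support prompt in its place."""
    return ["SUPPORT_PROMPT" if item.flagged_harmful else item.item_id
            for item in rank(items, wellbeing_weight)]


items = [Item("a", 0.9, 0.1), Item("b", 0.6, 0.9), Item("c", 0.8, 0.5, flagged_harmful=True)]
print(build_feed(items, wellbeing_weight=0.0))  # engagement only
print(build_feed(items, wellbeing_weight=0.5))  # blended metric
```

When ranking uses engagement alone, item `a` leads the feed. With the blended metric, `b` moves to the top. In both cases the flagged item is replaced by a prompt. How much weight to give well-being is exactly the kind of trade-off the paragraph above describes.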

As we navigate this new reality, we must ask ourselves: Are we building systems that genuinely help people in crisis, or are we creating a digital surveillance state under the guise of mental health protection? The answer may determine the future of both social media and mental health care.

Remember:

Technology can be a powerful tool—but it will never replace the need for human connection, traditional support systems, and offline care, especially for people who remain outside the digital world or choose not to share their struggles online.

Getting Help

If you are in crisis, please call 911 or go to your nearest emergency centre right away. For crisis contacts and additional resources, visit our emergency page.

If you or someone you know is struggling with mental health challenges, remember you’re not alone and help is available. If you’d like guidance in finding the right therapist, consider booking a free 15-minute call with us to get started.

Sources

- Artificial Neural Network: ANN Model Study

- NPR: Facebook Increasingly Reliant on A.I. To Predict Suicide Risk (2018)

- CNBC: How Facebook uses AI for suicide prevention (2018)

- npj Digital Medicine: Methods in predictive techniques for mental health status on social media (2020). https://www.nature.com/articles/s41746-020-0233-7

- Scientific Reports: Deep neural networks detect suicide risk from textual Facebook posts (2020). https://pubmed.ncbi.nlm.nih.gov/33028921/

- Yale Medicine: How Social Media Affects Your Teen’s Mental Health (2024)

- MDPI: Early Detection of Mental Health Crises through AI-Powered Social Media Analysis

- Journal of Medical Internet Research: AI for Analyzing Mental Health Disorders Among Social Media Users