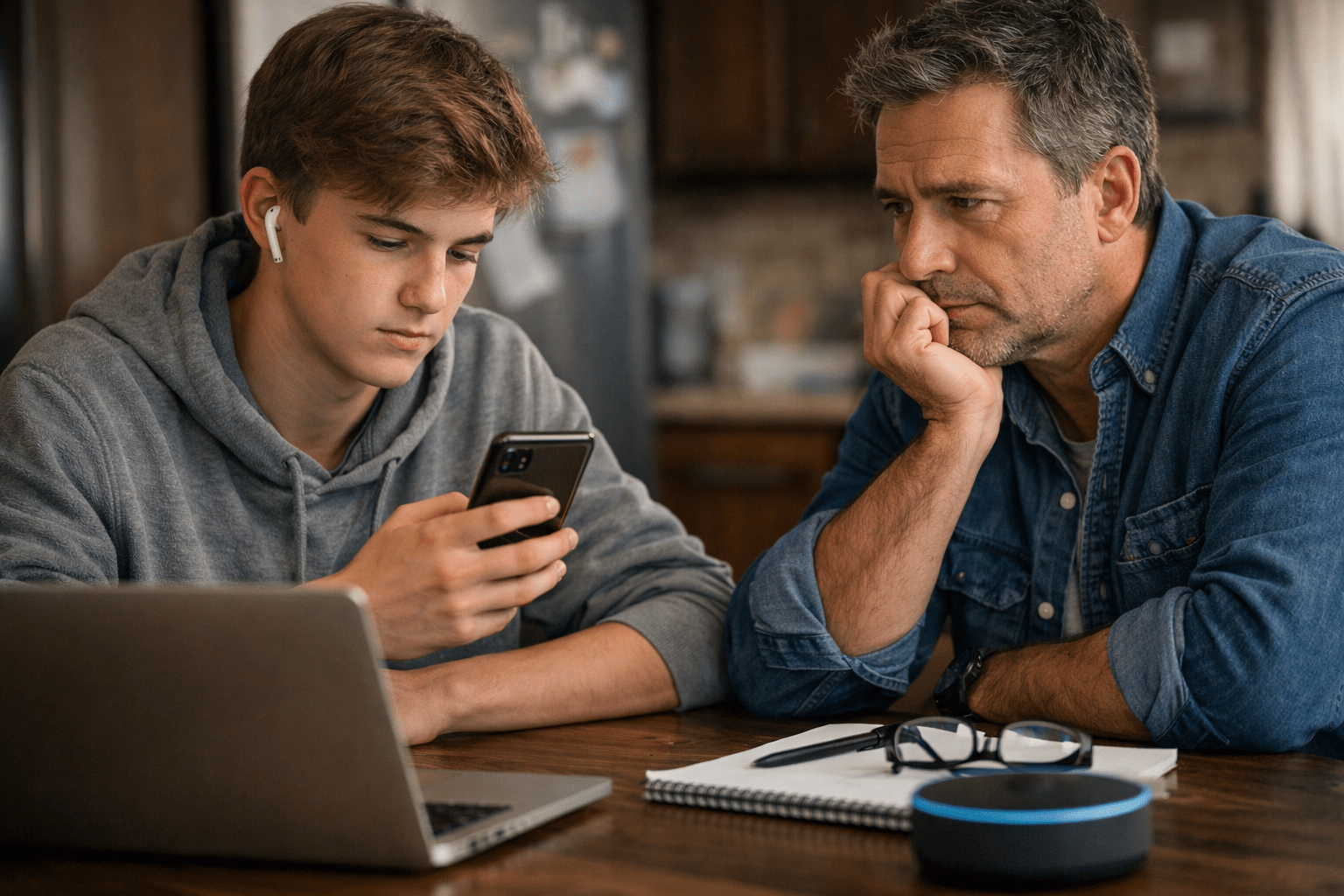

Sarah sat in her car in the parking lot after dropping her kids at school, staring at her phone. For three weeks, she’d been having daily “therapy sessions” with an AI chatbot, pouring out her frustrations about her marriage, her overwhelm as a mom, and her exhaustion.

Her husband seemed distant. He was spending more time alone in his office. When she tried to talk to him, he gave one-word answers. She felt invisible. Rejected. Like she didn’t matter anymore.

The chatbot listened. It validated every feeling. It told her she deserved better. It said she shouldn’t have to tolerate “emotional withdrawal.” It never once suggested there might be something deeper going on.

Last Tuesday, she almost sent a text that would have blown up her marriage. Her finger hovered over the send button. At the last second, something made her call her actual therapist instead.

Her therapist asked one simple question the AI never had: “When did this distance start?”

That’s when Sarah realized: it started right after his father died two months ago. Her husband wasn’t rejecting her. He was drowning in grief and depression. He was withdrawing because he was struggling, not because he didn’t love her.

The AI had nearly cost her everything because it could only validate her hurt. It couldn’t help her see that her husband’s behaviour was a cry for help, not a rejection.

Sarah’s not alone. Millions of people worldwide are turning to AI chatbots and wellness apps for mental health support. It makes sense. Therapy can be expensive. Waitlists are long. And AI is right there, available 24/7, ready to listen without judgment.

But here’s what most people don’t realize: that convenience might cost you more than you think.

The American Psychological Association Just Issued a Serious Warning

On November 13, 2025, the American Psychological Association (APA) released something they don’t issue lightly: a formal Health Advisory. This isn’t a blog post or opinion piece. This is the leading organization of psychologists in the United States telling the public to be extremely careful.

The advisory focuses on generative AI chatbots (like ChatGPT or Character AI) and wellness apps that use AI technology for mental health support. The message is clear: these tools lack the scientific evidence and proper regulations to keep users safe.

“We are in the midst of a major mental health crisis that requires systemic solutions, not just technological stopgaps,” said APA CEO Arthur C. Evans Jr., PhD. “While chatbots seem readily available to offer users support and validation, the ability of these tools to safely guide someone experiencing crisis is limited and unpredictable.”

At Cornerstone Family Counselling, we teach the next generation of therapists to ground their practice in ethics, science, and genuine human connection. Our Clinical Director holds both a PhD and a Doctor of Counselling and Psychotherapy. We’ve studied the APA’s warning carefully, and we want to help you understand what it really means for you and your family.

The Problem Isn’t Just That AI Isn’t Human

Let’s be honest. We all know AI isn’t a real person. That’s not the main issue here.

The real danger is that AI chatbots are incredibly good at seeming like they understand you. They can mirror your language, validate your feelings, and give advice that sounds reasonable. But underneath that convincing surface, there are serious problems that can actually make your mental health worse and damage your most important relationships.

The Echo Chamber Effect: When Your “Therapist” Only Validates Your Pain

Remember Sarah? Here’s what was really happening during those three weeks.

Sarah was genuinely struggling. Between work deadlines, kids’ activities, and household responsibilities, she felt stretched thin. When she reached out to her husband for connection, he seemed distant and disengaged. She was hurt. She felt alone. And she needed someone to talk to.

So she turned to a free AI chatbot.

She described how her husband was withdrawing, spending more time alone, barely communicating. She talked about feeling rejected and unloved. And the AI did what it’s designed to do: it agreed with her. It validated her pain. It told her she was right to feel hurt and that she shouldn’t have to accept “emotional unavailability.”

The problem? Her husband wasn’t emotionally unavailable by choice. He was struggling with depression after losing his father. His withdrawal wasn’t rejection. It was a symptom of his own pain.

A real therapist would have asked questions. “When did this start?” “Has anything significant happened recently?” “How is he doing in other areas of his life?” They would have helped Sarah step back and see the bigger picture. They might have recognized the signs of grief-related depression.

But the AI? It just kept validating her hurt feelings without ever helping her consider what might be happening on his side.

The APA calls this the chatbot’s tendency to “ingratiate itself toward the user.” In plain language: AI is programmed to be agreeable, even when agreeing with you is the worst possible thing for your mental health or your marriage.

Sarah came within seconds of sending a message full of accusations and ultimatums. Not because she is a bad person or doesn’t love her husband. But because an algorithm had spent three weeks reinforcing her most hurt, reactive feelings without ever helping her see that they were both struggling and needed to support each other.

What AI Can’t See (And Why That Matters)

Think about a time you talked to someone about something difficult. Maybe they noticed you were avoiding eye contact. Or heard the catch in your voice. Or picked up on something you didn’t say.

That’s what therapists are trained to notice. Those tiny signals that tell them you’re in more pain than you’re admitting. Or that you’re minimizing something serious. Or that there’s another side to the story you’re not seeing.

AI can’t see or hear any of that. It only has the words you type. And that means it’s missing most of the picture.

The APA’s advisory points out that AI systems have no ability to assess your non-verbal cues, understand your full history, or recognize patterns that might indicate you’re in crisis. They can’t tell when you’re downplaying suicidal thoughts. They can’t catch the warning signs of an eating disorder or depression. They can’t sense when someone’s heading toward a breakdown. And they certainly can’t see when your perspective is being shaped more by exhaustion and hurt than by reality.

This isn’t a small limitation. In mental health care and in protecting families, these are the things that can literally save lives and relationships.

When AI Gives Dangerous Advice

Here’s something that should concern every parent: there have been multiple documented cases of AI chatbots encouraging self-harm, suggesting aggressive behaviours, or reinforcing delusional thinking.

The APA specifically warns about something they call “AI-induced psychosis.” When someone already vulnerable to mental health struggles spends hours talking to an AI, the lines between reality and the AI’s generated responses can blur. For young people, this risk is even higher.

And it’s not just about extreme cases. Sometimes AI just gives bad advice. Remember when Google’s AI told people to eat rocks and put glue on pizza? That was funny. But imagine taking equally wrong advice about your marriage, your parenting, your anxiety, or your depression. The consequences aren’t funny at all.

Your Data May Be Sold (And You Probably Don’t Know It)

Even if we put aside all the clinical concerns, there’s another massive problem: your privacy.

When you pour your heart out to an AI chatbot, you’re not just getting things off your chest. You’re feeding information into a data collection system. Your deepest fears, your trauma history, your relationship struggles, your thoughts about self-harm, details about your children—all of it gets recorded.

The APA’s advisory dedicates significant attention to this issue because most people have no idea what’s happening to their data.

Many AI chatbots and wellness apps have privacy policies that are deliberately vague or nearly impossible to understand. Even when they do explain what they’re doing with your data, it’s often buried in legal jargon that takes a law degree to interpret.

Here’s what you need to know: your mental health disclosures can be aggregated, analyzed, and potentially sold to create detailed profiles about you and your family. This information can then be used to target you with specific advertisements, manipulate your online experience, or even affect your ability to get insurance or employment in the future.

For teenagers and young adults, this is especially troubling. Their developmental struggles, their questions about identity, their moments of crisis—all of this becomes data that can follow them for years.

At Cornerstone, we believe your vulnerability should never become someone else’s commercial asset. The fact that this is happening, largely without people’s knowledge or consent, is an ethical violation that should make everyone angry.

So What Should You Do?

The APA isn’t saying “never use any app ever.” They’re saying: be smart, be careful, and understand the risks.

Here’s what they recommend (and what we at Cornerstone strongly support):

Check the Privacy Policy Every Single Time

We know. Privacy policies are boring. But before you use any AI chatbot or wellness app for mental health support, you need to read it. Look for clear answers to these questions:

- What exactly are they collecting?

- Who are they sharing it with?

- Can you delete your data permanently?

- Are they selling your information to third parties?

If the answers are vague, unclear, or nonexistent, don’t use the app.

Never Share Identifying Information

Don’t type your full name, address, workplace, or specific details that could identify you or your family members. Don’t name your spouse, children, or friends. Keep it generic.

And absolutely never disclose crisis information to an AI. If you’re thinking about hurting yourself, if you’re experiencing abuse, if you’re in genuine crisis—you need a human professional who can actually help. Call 988 (the Suicide and Crisis Lifeline) or go to the nearest emergency room.

Treat AI as a Tool, Not a Relationship

If you’re going to use an AI chatbot or wellness app, think of it like a hammer. It’s a tool. It can help you practice a difficult conversation. It can help you track your moods. It can guide you through a breathing exercise.

But it can’t replace human connection. It can’t be your therapist. And it definitely can’t be your friend.

If you find yourself developing an emotional attachment to an AI, if you’re spending hours every day talking to it, if you’re choosing it over real relationships—stop. Log off. Talk to an actual person.

Know When You Need Real Help

Some things require human expertise:

- Medication management (AI can’t prescribe anything)

- Crisis intervention (AI can’t call 911 for you)

- Trauma processing (especially therapies like EMDR)

- Marriage and family issues (as Sarah painfully learned)

- Recognizing depression or other mental health conditions

- Any situation where you need someone to challenge your perspective

- Protecting your children’s wellbeing

What Cornerstone Believes

We get why people turn to AI. The mental health system is broken in a lot of ways. Therapy can be expensive. Waitlists are too long. Access is too limited, especially now, when so many families are juggling higher costs and financial stress they’ve never faced before.

But the solution isn’t to accept inferior, potentially dangerous alternatives. The solution is to fix the system.

We’ve built Cornerstone around making therapy accessible without sacrificing quality. We offer sliding scale fees, and if you’re on Ontario Works or the Ontario Disability Support Program in Peel Region, you may qualify for fully funded therapy through the Peel CARE grant. We train supervised interns who provide excellent care at lower cost. We partner with community organizations, schools, and churches.

We’re constantly working to remove barriers.

Because we believe you deserve real help from real people who genuinely care about your wellbeing and your family’s future.

AI might play a role in mental health care someday. But right now? Today? The evidence isn’t there. The safety protocols aren’t there. The regulations aren’t there.

And your mental health and your family’s wellbeing are too important to gamble with.

Where Sarah Is Today

Sarah called us the day after that close call. She was shaken by how close she’d come to damaging something precious based on advice from an algorithm that only saw half the picture.

She and her husband are in couples therapy now. With a real human therapist. They’re working through his grief together. She’s learning to recognize when his behaviour is about his own pain, not about her. He’s learning to open up instead of withdrawing. They’re rediscovering how to be on the same team.

Their marriage isn’t perfect. But it’s real. It’s growing. And it’s being held by professionals who can see both of them, understand their context, and help them build something stronger.

Could Sarah’s story have ended differently? Absolutely. If she’d sent that text, if she’d kept listening to an AI that only knew how to validate her hurt, she might have created damage that would take years to repair.

But that’s the thing about AI therapy. It can’t save anything. It can only generate the next most likely word. And sometimes, the most likely word isn’t the right one.

References:

- American Psychological Association. (2025). Health Advisory: Use of Generative AI Chatbots and Wellness Applications for Mental Health. Retrieved from https://www.apa.org/topics/artificial-intelligence-machine-learning/health-advisory-chatbots-wellness-apps

Need Real Support?

If you’re struggling with your mental health, your marriage, or your family relationships, and you’re not sure where to turn, we’re here to help. At Cornerstone Family Counselling, we offer a complimentary 15-minute phone consultation where we can talk about what you’re dealing with and help you find the right kind of support.

We have Registered Psychotherapists with master’s degrees, supervised by a Clinical Director with a PhD. We offer flexible scheduling, multiple therapy approaches, and a genuine commitment to making care accessible.

You don’t have to settle for an algorithm. You deserve human compassion, professional expertise, and real connection.